Will Autonomous Car Vision Systems Ever Replace the Human Eye?

30-01-2020 | By Paul Whytock

When it comes to maximising vision, technologists and nutritionists have a lot in common. Whereas the latter focus on human eye health, electronics technologists see automotive vision systems as the current challenge in the development of autonomous vehicles.

But before delving into the technical intricacies and hurdles involving electronics-based vision systems like Lidar, radar and SWIR, what is the link between these and healthy eating?

We’ve all heard that eating carrots helps you see in the dark, and this happens to be true. Just as electronics-based vision systems rely on sensor signals being analysed by microprocessors, human vision relies on signals being transmitted to the brain for analysis.

Simply put, the beta-carotene in carrots helps us generate vitamin A, and it is this vitamin that helps our eyes convert the multitude of light patterns we see into signals. These are then transmitted to the brain, which converts them into vision.

So the challenge for the electronics physicists and engineers developing vision systems for autonomous cars is this: can they replicate the human system, or perhaps make it even more efficient, particularly in low-light and poor-visibility conditions?


Innovations in Lidar and SWIR

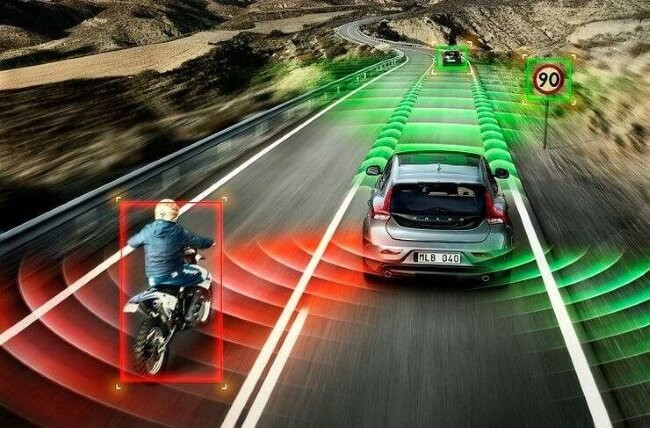

Let's take a look at the Lidar and SWIR approaches. Lidar (light detection and ranging) has a lot in common with radar in that it rapidly analyses reflected light signals to build a picture of what is around it. SWIR systems, by contrast, are vision systems based on short-wave infrared imaging sensors, and they have the advantage that they can see in low light and fog. They are currently being looked at by German high-end sports car manufacturer Porsche.

The principle behind Lidar is pretty straightforward. Light travels extremely fast, at about 186,000 miles/sec (roughly 300,000 km/sec), and consequently a system that measures the bounce-back of light travelling at that speed needs to be pretty nippy.

Within Lidar systems are sensors that measure the time it takes for each light pulse to bounce back. Because light travels at a constant speed, it is mathematically straightforward to calculate the distance between a Lidar-equipped car and surrounding objects: the distance is the speed of light multiplied by half the round-trip time.
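The time-of-flight calculation described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular Lidar product works: the function names are hypothetical, and the sketch assumes the pulse travels at the vacuum speed of light and ignores detector latency and atmospheric effects.

```python
# Minimal time-of-flight sketch. Function names are illustrative
# assumptions, not part of any real Lidar API.

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact value in a vacuum


def lidar_distance_m(round_trip_time_s: float) -> float:
    """Distance to a target given the pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is
    half of (speed of light x round-trip time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse calculation: how long the echo takes for a given range."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # An echo that returns after one microsecond comes from roughly 150 m away.
    print(f"{lidar_distance_m(1e-6):.2f} m")
    # A target at 150 m returns its echo in about a microsecond.
    print(f"{round_trip_time_s(150.0) * 1e9:.1f} ns")
```

The figures show why the electronics need to be fast: an object 150 metres ahead returns its echo in about a millionth of a second, so resolving distances to within a few centimetres means timing pulses to fractions of a nanosecond.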

However, Lidar systems have attracted a considerable amount of criticism, not because of any technical inability but because of cost. Even autonomous car advocate Elon Musk of Tesla has said that at around $70,000 they add too much cost to a vehicle. However, a new player on the block, Luminar, recently announced Lidar solutions at a bargain-basement price of $1,000, and the company is working with major car manufacturers such as Toyota, Audi and VW on self-driving car designs.

Technology Elements

Now that price drop is significant given the technology that goes into Lidar. Most systems have three main technology elements.

Firstly, there are the lasers. These typically operate at wavelengths between 600 and 1000nm, and their maximum output has to be rigorously controlled to make them safe for the human eye. Then there are the scanners and optical components. The speed at which a system can create images is directly proportional to the speed at which the scene can be scanned into it. A variety of scanning methods are available for different purposes, such as azimuth and elevation, dual oscillating plane mirrors, dual-axis scanners and polygonal mirrors. The type of optic affects the resolution and range that a system can detect.

Thirdly, and of primary importance, there is the technology that reads and records the signal returned to the system. There are two main types of photodetector technology: solid-state detectors, such as silicon avalanche photodiodes, and photomultipliers.

Obviously none of this technology comes cheap, so claims of being able to massively reduce the overall cost of Lidar systems are interesting. They may, of course, be related to the projected economies of scale that could be achieved if and when Lidar becomes part of mass-produced autonomous cars.

Meanwhile, over at Porsche, studies are taking place to see if short-wave infrared imaging sensors (SWIRs) could prove the right vision choice for autonomous vehicles. Unlike Lidar and conventional radar systems, SWIRs are able to capture clear visual images in fog, dust and low light.

Israeli start-up TriEye, whose Short-Wave Infra-Red (SWIR) sensing technology enhances visibility in adverse weather and night-time conditions, announced a collaboration with the German sports car manufacturer Porsche to further improve the visibility and performance of Advanced Driver Assistance Systems (ADAS) and autonomous vehicles.

As ADAS systems are expected to operate under a wide range of scenarios, car manufacturers recognise the need to integrate advanced sensing solutions. Even when combining several sensing solutions such as radar, lidar and standard cameras, it is not always possible to accurately detect and identify all objects on the road when visibility is limited.

To address this particular challenge, Porsche has identified TriEye’s CMOS-based SWIR camera as part of a system to achieve better visibility, especially in adverse weather conditions.

So what is SWIR?

Short-wave infrared refers to multispectral and hyperspectral data collected in the 1.4-3µm wavelength range. SWIR is sometimes called reflected infrared. Infrared (IR) light in general is electromagnetic radiation (EMR) with wavelengths longer than those of visible light. It is invisible to the human eye, although IR at wavelengths up to 1050 nanometres from specially pulsed lasers can be seen by humans under certain conditions. IR wavelengths extend from the nominal red edge of the visible spectrum at 700 nanometres to one millimetre. Most of the thermal radiation emitted by objects near room temperature is infrared. Night-vision devices commonly use active near-infrared illumination.

However, the real question is whether these systems can be relied on to totally replace the human eye and its very sophisticated microprocessor, the human brain. The answer at present is not entirely. Both systems have problems. Lidar cannot cope with poor weather conditions, and SWIR cameras are limited in the range at which they can operate and face some weather challenges of their own.

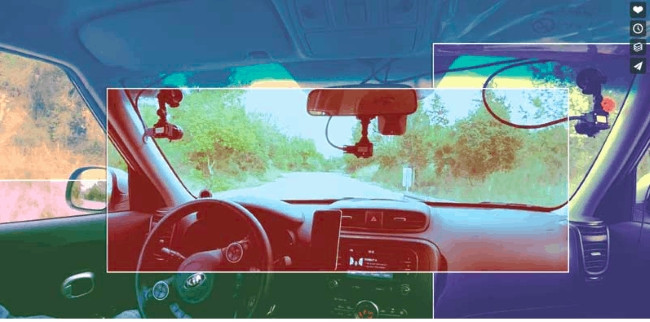

Ideally, we need to integrate the electronic vision technology with the eye and attention of the driver of the autonomous vehicle, so that the car switches between the two as required. However, to achieve this the human driver needs to be constantly monitored for potential distractions that can have a dangerous effect on his driving.

In current road accident statistics, around 12% of people involved in car crashes are killed while 18% are injured.

So what counts as driver distraction relative to a driver's line of vision? Looking for more than two seconds in a direction 30° sideways, up or down while the vehicle is travelling at more than 5 mph would be classified as distraction. There are also distractions involving smartphones, passing scenery and unruly children in the car; in fact, a multitude of things.
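The gaze rule above translates naturally into a small check over a stream of gaze measurements. The sketch below is only one possible reading of that rule: the `GazeSample` structure, the field names and the choice to treat "30°" as a threshold of 30° or more off the straight-ahead direction are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable

# Thresholds taken from the rule described in the text.
MAX_OFF_AXIS_DEG = 30.0  # gaze angle sideways, up or down
MAX_GLANCE_S = 2.0       # longest tolerated off-axis glance
MIN_SPEED_MPH = 5.0      # rule only applies above this speed


@dataclass
class GazeSample:
    """One gaze measurement (hypothetical structure for this sketch)."""
    timestamp_s: float
    yaw_deg: float    # sideways angle, 0 = straight ahead
    pitch_deg: float  # up/down angle, 0 = straight ahead
    speed_mph: float


def is_off_axis(sample: GazeSample) -> bool:
    """Assumption: 30 degrees or more in either axis counts as looking away."""
    return (abs(sample.yaw_deg) >= MAX_OFF_AXIS_DEG
            or abs(sample.pitch_deg) >= MAX_OFF_AXIS_DEG)


def is_distracted(samples: Iterable[GazeSample]) -> bool:
    """True if gaze stays off-axis for more than two seconds above 5 mph.

    Samples are assumed to arrive in time order.
    """
    glance_start = None
    for s in samples:
        if s.speed_mph > MIN_SPEED_MPH and is_off_axis(s):
            if glance_start is None:
                glance_start = s.timestamp_s
            if s.timestamp_s - glance_start > MAX_GLANCE_S:
                return True
        else:
            glance_start = None  # glance ended or the car slowed down
    return False


if __name__ == "__main__":
    # 2.5 seconds of samples at 30 frames/sec, looking 35 degrees to the side.
    long_glance = [GazeSample(i / 30, 35.0, 0.0, 40.0) for i in range(76)]
    print(is_distracted(long_glance))
```

A real driver-monitoring system would also have to cope with missing samples, head pose versus eye gaze, and mirror checks, none of which this sketch attempts.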

It’s impossible to monitor the driver for all of these distractions, but technology is available to detect if he may be getting drowsy. For instance, lane departure cameras that spot the vehicle veering slightly out of lane position will, when it happens three times in quick succession, emit a screaming noise at the driver to wake him up.

There are also in-car infrared cameras that catch drowsy drivers by detecting drooping eyelids, and these technologies have reached a level of sophistication whereby they can be customised for different drivers in the same family.

However, when an infrared camera looking at the driver is used to detect distraction, the 850nm LED light from the camera hitting the driver's face can be red enough to be seen easily by most people, especially at night.
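The "three times in quick succession" rule amounts to counting events inside a sliding time window. Here is a rough sketch of that idea; the class name is hypothetical, and the 10-second window is purely an assumed figure, since the length of "quick succession" is not specified here.

```python
from collections import deque


class LaneDepartureMonitor:
    """Raises an alarm after repeated lane departures in a short window.

    Hypothetical sketch: the 10-second default window is an assumption,
    not a figure from any real lane departure system.
    """

    def __init__(self, max_events: int = 3, window_s: float = 10.0):
        self.max_events = max_events
        self.window_s = window_s
        self._events = deque()  # timestamps of recent departures

    def record_departure(self, timestamp_s: float) -> bool:
        """Log a departure; return True if the alarm should sound."""
        self._events.append(timestamp_s)
        # Discard departures that fall outside the sliding window.
        while self._events and timestamp_s - self._events[0] > self.window_s:
            self._events.popleft()
        if len(self._events) >= self.max_events:
            self._events.clear()  # start counting afresh after an alarm
            return True
        return False


if __name__ == "__main__":
    monitor = LaneDepartureMonitor()
    for t in (0.0, 4.0, 8.0):
        if monitor.record_departure(t):
            print(f"Alarm at t={t} s")
```

Keeping only the timestamps inside the window means that occasional drifts spread over a long journey never add up to an alarm, while a cluster of them does.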

Disadvantages and Solutions

The problem, though, is that if measures are taken to dim the light, the camera becomes less effective at detecting driver distraction and poor images are the result.

A potential solution is to use a longer wavelength of 940nm. This makes the camera less sensitive, but that can be handled by pulsing the LED with higher peak currents at a lower duty cycle and using a global shutter image sensor. The cameras typically used run at 30 frames/sec, which is fast enough because a driver's gaze while driving does not change that often or that fast.
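The trade-off between peak current and duty cycle is simple arithmetic. With a global shutter sensor every pixel is exposed at the same moment, so the LED only needs to be lit during that short exposure in each frame. In the sketch below, the 30 frames/sec rate comes from the text above, while the 1 ms pulse width and 3 A peak current are made-up example values, not figures for any real camera.

```python
# Duty-cycle arithmetic for a pulsed infrared LED.
# The pulse width and peak current used in the example are
# hypothetical illustration values.


def duty_cycle(pulse_width_s: float, frames_per_s: float) -> float:
    """Fraction of time the LED is on, with one pulse per frame."""
    frame_period_s = 1.0 / frames_per_s
    if not 0 <= pulse_width_s <= frame_period_s:
        raise ValueError("pulse must fit within one frame period")
    return pulse_width_s / frame_period_s


def average_current_a(peak_current_a: float,
                      pulse_width_s: float,
                      frames_per_s: float) -> float:
    """Average LED current: peak current scaled by the duty cycle."""
    return peak_current_a * duty_cycle(pulse_width_s, frames_per_s)


if __name__ == "__main__":
    fps = 30.0       # frame rate quoted in the article
    pulse_s = 0.001  # assumed 1 ms flash per frame
    peak_a = 3.0     # assumed peak drive current
    print(f"duty cycle: {duty_cycle(pulse_s, fps):.1%}")
    print(f"average current: {average_current_a(peak_a, pulse_s, fps):.3f} A")
```

Under these assumed numbers the LED is on for about 3% of the time, so a high peak current during the exposure still works out to a modest average current.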

So when the systems detect driver drowsiness or distraction the autonomous vision systems can kick in to guide the autonomous car. Or so the theory goes. The problem as we know it is that it's going to take decades for all roads and autonomous car technology to be compatible with the multitudes of different driving situations.

And it's not just about consumers being confident that their autonomous car and its electronic vision systems are fail-safe. There is another big question that will discourage drivers from adopting the technology, and that’s the law. If everything that happens with the driver and car is recorded on in-car cameras, then those images could end up being used in a court of law against the driver.

So however good autonomous cars, their vision systems and in-car driver surveillance systems get, the big question is whether consumers will ever really buy into them. There have already been suggestions that car owners should be able to disable in-car distraction surveillance cameras because of the legal implications. This, from a safety perspective, would of course be complete nonsense.