Driverless Cars Need to Hear As Well As See What’s Coming

28-06-2021 | By Paul Whytock

If driverless cars are to become a reality they will have to have the same sight and sound abilities as humans. Until now we have heard plenty about technological breakthroughs regarding sight systems for the autonomous cars of the future but very little about ones that make cars hear.

Hearing is an extremely important factor when it comes to absorbing and analysing the surrounding information we humans need to make everyday driving decisions. Now I know some readers will immediately say that today’s hard-of-hearing drivers get by, and that’s true. But just as we have visual indication and alarm systems that tell the hard-of-hearing that the front door needs answering or that there’s a gas leak, future autonomous cars could have the same sound-activated visual alarm systems for all drivers, not just the hard-of-hearing.

All that seems pretty sensible, and there are good reasons why cars should have ears. But before looking into the why and how of that, let me just say I don’t think totally autonomous cars will ever really happen.

Now, this is not me adopting an intransigent Luddite view; I just don’t think autonomous cars will ever achieve the broad-ranging independent flexibility provided by human-controlled vehicles. If, however, you do, have a think about the following driving scenario.

An autonomous car’s sight system identifies a human walking across the fast lane of a major highway, but the car is driving too fast to simply brake to a halt. So the autonomous car has to make a decision. It can try to stop, in which case it will most likely injure or kill the pedestrian, or it can take swift evasive action, in which case it may hit another vehicle or go off-road, possibly killing other pedestrians walking on the pavement. In both cases, it may also kill the occupants of the autonomous car. Are we really saying that cars will become smart enough and sufficiently moral in their outlook to decide who should live or die in that scenario?

But for now, the development of autonomous cars will undoubtedly continue and, even with my somewhat jaundiced view of them, that is a good thing. It drives forward many technological developments that should serve us well in various applications.

So smart cars need to hear things and hear them a lot better and sooner than us humans.

Today’s driverless cars see things via cameras, radar and lidar, so objects need to be within the line of sight to be identified by the system. But what about emergency vehicles?

In a recent experiment and data collection exercise performed in Phoenix, USA, Waymo tested a fleet of driverless vans fitted with microphones developed by the company. Waymo is an autonomous driving technology development company and a subsidiary of Alphabet Incorporated, the parent company of Google. The microphones let the vehicles hear sounds twice as far away as previous sensors while also letting them identify the direction of the sound.

The Waymo system was tested by spending a day with emergency vehicles, during which police cars, ambulances and fire engines chased, passed and led the Waymo vans through the day and into the night. Sensors aboard the vans recorded large quantities of data and built a database of all the sounds emergency vehicles make. This will greatly assist autonomous vehicles by alerting the driver to various noises, identifying them and prompting appropriate action by the vehicle.
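How Waymo’s microphones work out the direction of a sound is not covered here, but one textbook approach is to compare when the same sound arrives at two microphones a known distance apart. The following is a minimal sketch of that idea only. The function names, the brute-force correlation and the example spacing and sample rate are my own assumptions, not details of the Waymo system.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value for dry air at about 20 degC


def best_lag(left, right, max_lag):
    """Return the lag, in samples, by which `right` trails `left`.

    Uses a brute-force cross-correlation over lags -max_lag..max_lag.
    """
    def score(lag):
        return sum(
            left[i] * right[i + lag]
            for i in range(len(left))
            if 0 <= i + lag < len(right)
        )

    return max(range(-max_lag, max_lag + 1), key=score)


def bearing_degrees(lag_samples, sample_rate_hz, mic_spacing_m):
    """Convert an arrival-time difference into an angle off the broadside axis.

    Assumes a distant source, so the wavefront is treated as flat.
    """
    delay_s = lag_samples / sample_rate_hz
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # guard against rounding past +/-1
    return math.degrees(math.asin(ratio))


# Hypothetical example: a short pulse reaches the right microphone 3 samples late.
left_signal = [0.0] * 50
left_signal[10:13] = [1.0, 2.0, 1.0]
right_signal = [0.0] * 3 + left_signal[:-3]

lag = best_lag(left_signal, right_signal, max_lag=5)
angle = bearing_degrees(lag, sample_rate_hz=48_000, mic_spacing_m=0.2)
```

With these made-up numbers the pulse arrives three samples later at the second microphone, which corresponds to a source roughly six degrees off to one side. A real system would need more microphones, noise handling and far more robust signal processing than this.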

In another vehicle hearing development, German chip maker Infineon Technologies has teamed up with edge computing and artificial intelligence specialist Reality AI to develop a sensing solution that provides vehicles with the ability to hear.

Adding XENSIV MEMS microphones to existing sensor systems enables cars to sense what’s around a corner and to warn about moving objects that are hidden from the autonomous car’s vision systems, such as things concealed in a blind spot or approaching emergency vehicles that are still too far away to see.

This new sensing technology is based on XENSIV MEMS microphones that are applied in conjunction with AURIX microcontrollers and Reality AI’s Automotive See-With-Sound system. Using machine learning algorithms, the system is particularly smart because it recognises the country-specific sirens of emergency vehicles, so it can be used globally.

The automotive-qualified XENSIV MEMS microphone IM67D130A has an extended operating temperature range of -40°C to +105°C, which means it can cope with harsh automotive environments. Its low distortion and acoustic overload point of 130 dB SPL enable the microphone to capture distortion-free audio signals in loud environments. This is important because it enables reliable recognition of an emergency siren even if the siren sound is masked by loud background noise or high wind noise. This sound-based sensing technology can also enable other applications in vehicles such as road condition monitoring and damage detection.
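To put the 130 dB SPL figure in perspective, a sound pressure level can be converted into an actual pressure in pascals using the standard 20 micropascal reference. The short sketch below does that arithmetic. The function name is my own for illustration and has nothing to do with Infineon’s documentation or software.

```python
REFERENCE_PRESSURE_PA = 20e-6  # standard reference for dB SPL in air (20 micropascals)


def spl_to_pascals(level_db_spl):
    """Convert a sound pressure level in dB SPL to RMS pressure in pascals."""
    return REFERENCE_PRESSURE_PA * 10 ** (level_db_spl / 20)


overload_pa = spl_to_pascals(130)    # the acoustic overload point quoted above
calibration_pa = spl_to_pascals(94)  # a level commonly used to calibrate microphones
```

On that basis, 130 dB SPL works out to roughly 63 Pa, compared with about 1 Pa at 94 dB SPL.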

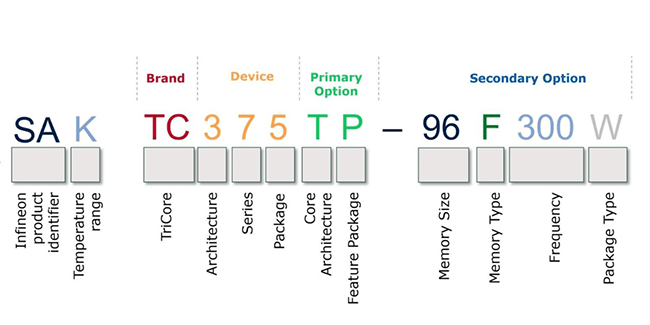

To process the received audio signal, the Reality AI software employs Infineon’s AURIX TC3x family of microcontrollers, which is used across multiple automotive applications. The scalable microcontroller family offers a range from one to six cores and up to 16 MB of flash, with functional safety up to ASIL-D according to the ISO 26262:2018 standard and EVITA Full cybersecurity. The company claims the AURIX TC3x gives customers the performance and flexibility to implement XENSIV MEMS microphones in ADAS application areas.