Is zero chip defects a never-ending journey for car-makers?

15-06-2022 | By Paul Whytock

Car manufacturers need semiconductor components to innovate and build better vehicles. It's a constant cycle of trying to be the maker most attractive to customers, and these days nearly all automotive evolution relies on electronic systems.

As a result of this reliance, semiconductors have become the fastest-growing component in modern vehicles, with cars containing up to £1200 worth of chips running anything up to 230 million lines of code. It's no surprise then that in 2019 nearly 50% of all vehicle recalls were because of defective electronic components.

Consequently, car-makers are putting significant pressure on semiconductor manufacturers to implement zero-defect strategies. Whereas defects used to be measured in parts per million (ppm), they are now measured in parts per billion (ppb).
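To put the move from ppm to ppb in perspective, the minimal Python sketch below converts a defect count into a rate and estimates how many faulty chips a given rate implies in a shipment. The shipment of 10 million chips is a hypothetical figure chosen for illustration; the 10 ppb rate matches the car-makers' target quoted later in the article. The function names are illustrative, not part of any industry tool.

```python
PER_MILLION = 1_000_000       # denominator for parts per million (ppm)
PER_BILLION = 1_000_000_000   # denominator for parts per billion (ppb)


def defect_rate(defective: int, shipped: int, per: int) -> float:
    """Defective parts per `per` shipped parts (PER_MILLION -> ppm, PER_BILLION -> ppb)."""
    return defective * per / shipped


def expected_defective(rate: float, per: int, shipped: int) -> float:
    """Expected number of defective parts in a shipment at a given ppm or ppb rate."""
    return rate * shipped / per


if __name__ == "__main__":
    shipped = 10_000_000  # hypothetical shipment of 10 million chips
    print(expected_defective(1.0, PER_MILLION, shipped))   # 10.0 faulty chips at 1 ppm
    print(expected_defective(10.0, PER_BILLION, shipped))  # 0.1 faulty chips at 10 ppb
    print(defect_rate(1, shipped, PER_BILLION))            # 100.0 ppb, i.e. 0.1 ppm
```

At 1 ppm the hypothetical shipment would contain about ten faulty chips; at 10 ppb, roughly one faulty chip would be expected for every ten such shipments.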

This is understandable, given the obvious safety hazards if cars malfunction. The challenge, however, is finding the right economic balance between the time, testing and money spent identifying faulty parts and how much that effort inflates the final price of the semiconductor components.

Car-makers may want zero defects, but they don't like paying more for the components, a cost that must be passed on to their customers in a very price-competitive business.

Conversely, car manufacturers never want the enormous cost of recalling thousands of vehicles because of defective systems caused by electronic failures.

Working out why a part has failed in the field involves extensive analysis to establish whether it is a one-off event or a costly problem affecting large numbers of vehicles.

Fortunately, automotive components and systems are covered by very detailed product traceability systems, so the precise number of vehicles carrying a defect can be calculated.

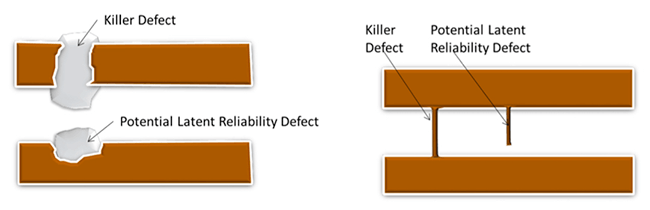

Identifying latent defects is particularly important. These occur when a component operates normally and within specification immediately after manufacture, but fails at some point during its operational life in the vehicle.

Latent Defects

Eliminating latent defects is a huge ask and may not be entirely possible. Chip manufacturers can spot minuscule defects, but traditionally the emphasis has been on identifying contaminants during manufacture that threaten overall production yields.

This focus has to change if anything like a zero-defect strategy is to become a reality. The emphasis now has to be on spotting contamination on the chips themselves that could result in latent reliability failures.

A large proportion of chips used in vehicle manufacture are analog or mixed-signal components, and generally speaking, these are produced on well-established process methods.

This suited the corporate wallets of car-makers for some time as the price of such components has been very competitive.

Innovation Pressure

However, when the pressure to innovate in car design rears its demanding head, the car-makers look for increasingly complex semiconductor devices. Because these require more sophisticated process methods and intricate testing procedures to make, the price per device rises. Just what the car-makers don't like.

But what they do like is a defect rate measured in parts-per-billion instead of the traditional parts-per-million.

As mentioned previously, this is where the fiscal balancing act comes in. To get anywhere near this quality level, semiconductor manufacturers employ statistical tools and techniques. One such tool is Gauge Repeatability and Reproducibility (GRR).

The technique involves studying a dataset generated by a sample of quality-assured devices that meet specifications. These devices are tested repeatedly on one machine to measure repeatability, and then tested on different machines to measure reproducibility.

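The data is then analysed with statistical software to quantify how much the measurements vary across the quality-assured group and to determine where that variation comes from: the test equipment itself (repeatability) or differences between machines (reproducibility).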

Once the datasets have been generated and statistically analysed, the results are used to set specification limits for the test parameters, ensuring that faulty devices are identified before they are shipped to the car-makers.
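The repeatability and reproducibility split described above can be sketched in a few lines of Python. This is a simplified variance-components estimate, not the full ANOVA method of formal measurement-system-analysis guidelines or any vendor's yield software. It assumes a balanced study (every tester measures the same devices the same number of times), takes repeatability as the pooled variance of repeated readings of one device on one tester, and takes reproducibility as the variance between testers' average readings after removing the repeatability noise those averages carry. The tester and device names, the readings, the specification limits and the factor of 6 standard deviations in the %GRR ratio are all illustrative assumptions.

```python
from statistics import mean, variance

# Hypothetical study layout: study[tester][device] -> repeated readings of
# one test parameter for that device on that tester.
Study = dict[str, dict[str, list[float]]]


def repeatability_variance(study: Study) -> float:
    """Equipment variation: pooled variance of repeat readings of the same
    device on the same tester, weighted by each cell's degrees of freedom."""
    cells = [r for per_device in study.values() for r in per_device.values()]
    pooled_ss = sum((len(r) - 1) * variance(r) for r in cells)
    dof = sum(len(r) - 1 for r in cells)
    return pooled_ss / dof


def reproducibility_variance(study: Study) -> float:
    """Tester-to-tester variation: variance of each tester's mean reading,
    minus the repeatability noise carried by those means (balanced study assumed)."""
    per_tester = [[x for r in per_device.values() for x in r] for per_device in study.values()]
    tester_means = [mean(readings) for readings in per_tester]
    n_readings = len(per_tester[0])  # readings per tester
    corrected = variance(tester_means) - repeatability_variance(study) / n_readings
    return max(0.0, corrected)  # a negative estimate is truncated to zero


def percent_grr(study: Study, lsl: float, usl: float, k: float = 6.0) -> float:
    """Combined gauge variation (k standard deviations) as a percentage
    of the specification tolerance USL - LSL."""
    sigma_grr = (repeatability_variance(study) + reproducibility_variance(study)) ** 0.5
    return 100.0 * k * sigma_grr / (usl - lsl)


# Illustrative data: two testers, two devices, three readings each.
study: Study = {
    "tester_A": {"dev1": [1.00, 1.02, 0.98], "dev2": [2.00, 2.01, 1.99]},
    "tester_B": {"dev1": [1.03, 1.05, 1.01], "dev2": [2.03, 2.04, 2.02]},
}

if __name__ == "__main__":
    print(f"repeatability variance:   {repeatability_variance(study):.6f}")
    print(f"reproducibility variance: {reproducibility_variance(study):.6f}")
    print(f"%GRR (LSL=0.5, USL=2.5):  {percent_grr(study, 0.5, 2.5):.1f}%")
```

A commonly cited rule of thumb treats a %GRR below about 10% of the tolerance as acceptable; the sketch only reports the figure and leaves the acceptance decision, and any resulting test limits, to the engineer.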

Linking GRR with other statistical tools powered by a yield management system is claimed to make the chip manufacturing process capable of detecting defects at parts-per-billion levels.

However, the electronics business never settles for the status quo. Enter artificial intelligence (AI) into the car design arena, a development that industry experts believe will increase the likelihood of chip defects being created or revealed.

The AI Challenge

The use of AI-driven systems in mission-critical automotive applications requires semiconductor devices with far greater computational power to process the enormous quantities of data involved. To boost processing power, some AI deep-learning chips being developed on very advanced process nodes are also being combined with one another.

Intel, for example, is working on technologies that put more computing power into chips by stacking tiles in three dimensions. The company believes this could create ten times as many connections between stacked tiles, allowing more complex tiles to be stacked on top of each other.

When it comes to AI, the more computational power, the better, and this is particularly important in autonomous vehicles. But that also means an increase in the amount and complexity of device testing and the time it takes to do that testing to achieve the required device quality. And this doesn't come cheap.

So, where does all this leave the concept of zero defects, the car-makers and the semiconductor manufacturers?

Infinite Perfection, Really?

Firstly, let's be realistic about this zero-defect ambition: in practice, it's unachievable. Infinite perfection can never exist, but defect rates measured in parts per billion can be driven down, and engineers are making real progress towards the car-makers' desired quality level of 10 defects per billion semiconductor devices.

Chip architectural design will undoubtedly help with this, and one idea that shows great promise is the redundancy concept, whereby chip architectures will have several processors running simultaneously on one chip. These would be deployed in vehicle safety-critical applications and create a fail-safe system.

If one processor developed a latent defect during the vehicle's operation and failed, the remaining processors would seamlessly continue operating as usual, and any dangerous vehicle incident would be avoided entirely.
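The article does not say how such redundant processors would coordinate. One well-established scheme, assumed here purely for illustration, is triple modular redundancy (TMR): three processors compute the same result and a voter passes on the majority answer, so a single faulty processor is outvoted. The Python sketch below shows such a voter and the textbook reliability of a 2-out-of-3 arrangement. The function names are hypothetical, and the model assumes processor failures are independent and the voter itself never fails.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_vote(outputs: Sequence[int]) -> Optional[int]:
    """Return the result agreed by a strict majority of redundant processors,
    or None when no majority exists (the fault cannot be masked)."""
    if not outputs:
        return None
    value, count = Counter(outputs).most_common(1)[0]
    return value if 2 * count > len(outputs) else None


def tmr_reliability(r: float) -> float:
    """Probability that a 2-of-3 voted system gives the right answer, assuming
    each processor works with independent probability r and the voter never fails."""
    # Either all three processors work, or exactly one of the three has failed.
    return r**3 + 3 * r**2 * (1 - r)


if __name__ == "__main__":
    # The third processor has developed a latent fault and disagrees.
    print(majority_vote([42, 42, 17]))      # 42
    print(round(tmr_reliability(0.99), 6))  # 0.999702
```

With each processor 99% reliable, the voted system is correct about 99.97% of the time. That gain rests on the independence assumption: a design flaw or defect shared by all three processors on the same chip would defeat the vote.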

The question is: could such fault-tolerant devices, capable of handling the vast computational load of an AI-enabled car, be created and quality-controlled at an acceptable cost per device, and would the car-makers be prepared to pay?