GaN and SiC Transform AI Data Center Efficiency

Insights | 24-07-2024 | By Gary Elinoff

The rising impact of Generative AI on data centers.

Key Takeaways about GaN and SiC Enhancing AI Data Centers:

- Data Centers now use roughly 2 to 3% of all the electricity generated worldwide.

- The amount of electricity used by Data Centers is about to skyrocket, largely due to the demands of Artificial Intelligence.

- In order to increase data center efficiency and thereby cut down on carbon emissions, data centers will increasingly rely on Wide Bandgap Semiconductors.

Introduction

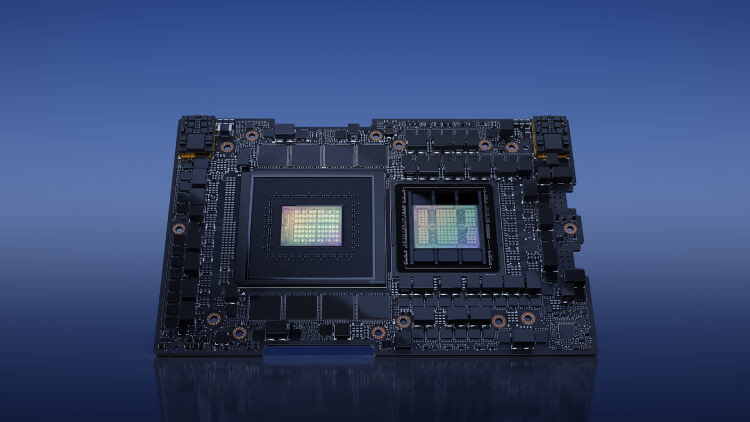

Artificial Intelligence is the biggest news in technology since the advent of the internet itself. And just as human intelligence must be “trained” to accomplish practical tasks, so too must AI. That training takes place within data centers, using “teaching” chips such as NVIDIA’s Grace Hopper Superchip, which require tremendous amounts of energy delivered at very low voltage and extraordinarily high current.

NVIDIA’s Grace Hopper Superchip uses as much as 2 kW of power. Image source: NVIDIA

This is placing unprecedented demands on an industry already straining under its current load. If the problem is not addressed, power shortages could halt AI’s rapid growth.

The demand for AI applications is intensifying, with data centers needing more efficient power solutions. According to GaN Systems, replacing silicon semiconductors with GaN can significantly enhance efficiency, reducing energy wastage and operational costs.

How will accommodating AI affect the demand for electricity? As noted by Paul Wiener, VP of Strategic Marketing at Infineon[1] (formerly GaN Systems), a ChatGPT session causes a data center to use “50 to 100 times more energy than a similar Google search.” As he notes, present-day data center infrastructure is simply not up to the job.

Paul Wiener highlights that adopting GaN technology can help mitigate these energy demands by improving power conversion efficiency and reducing heat generation within data centers. As Mr. Wiener states, “The digital world is undergoing a massive transformation powered by the convergence of two major trends: an insatiable demand for real-time insights from data, and the rapid advancement of Generative artificial intelligence (AI). Leaders like Amazon, Microsoft, and Google are in a high-stakes race to deploy Generative AI to drive innovation. Bloomberg Intelligence predicts that the Generative AI market will grow at a staggering 42% year over year in the next decade, from $40 billion in 2022 to $1.3 trillion.”
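
The growth figures in the quote can be checked with simple compound-growth arithmetic. The sketch below assumes that “the next decade” means ten full years of growth from the 2022 base; under that assumption, 42% annual growth takes $40 billion to roughly $1.33 trillion, and reaching exactly $1.3 trillion implies a rate of about 41.6%, so the quoted numbers are consistent.

```python
# Sanity check of the quoted market projection, assuming ten full years
# of compound growth starting from the 2022 figure.

def compound(start: float, rate: float, years: int) -> float:
    """Value after `years` of compound growth at fractional `rate` per year."""
    return start * (1.0 + rate) ** years

def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1.0 / years) - 1.0

START_USD = 40e9   # 2022 market size, from the quote
END_USD = 1.3e12   # projected market size, from the quote
YEARS = 10         # assumption: "the next decade" = 10 years

projected = compound(START_USD, 0.42, YEARS)
rate = implied_cagr(START_USD, END_USD, YEARS)
print(f"$40B at 42%/yr for {YEARS} years: ${projected / 1e12:.2f} trillion")
print(f"Growth rate needed to reach $1.3T: {rate:.1%}")
```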

Power Usage Effectiveness (PUE) – a Misleading Standard

PUE is a standard used to measure the electrical efficiency of a data center. As described in an article by Julian Styles[2] of GaN Systems (now Infineon), “PUE is determined by dividing the total amount of power entering a data center by the power used to run the IT equipment within it.”

Simple, isn’t it? But it turns out to be too simple, because it ignores the energy lost in converting the grid’s 240 VAC to the lower-voltage DC that is the starting point for a data center’s power usage. Using classical silicon-based semiconductors, the efficiency of this conversion can be as high as 90%. Impressive, but you still lose 10% of the power to heat. And that heat needs to be removed, which requires spending still more energy (more about that later).
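
A minimal, hypothetical sketch of why PUE can miss these conversion losses: if server power-supply losses are metered as IT power, and facility overhead is assumed (for simplicity) to scale in proportion to IT power, then raising power-supply efficiency from the 90% cited for silicon to the 96% cited for GaN lowers total facility power while PUE does not move at all. The 1 MW useful load and the 0.5 overhead ratio are illustrative assumptions, not figures from the article.

```python
# Hypothetical illustration of the "PUE loophole": power-supply (PSU) losses
# are counted as IT power, so PUE cannot see them.

def pue(total_facility_kw: float, it_kw: float) -> float:
    """PUE = total power entering the facility / power used by IT equipment."""
    return total_facility_kw / it_kw

def facility_power(useful_load_kw: float, psu_efficiency: float,
                   overhead_ratio: float) -> tuple[float, float]:
    """Return (IT power, total facility power) in kW.

    Simplifying assumptions, for illustration only:
    - useful_load_kw is the DC power the server electronics actually consume;
    - the PSU draws useful_load_kw / psu_efficiency, all metered as IT power;
    - cooling and other overhead equal overhead_ratio * IT power.
    """
    it_kw = useful_load_kw / psu_efficiency
    total_kw = it_kw * (1.0 + overhead_ratio)
    return it_kw, total_kw

USEFUL_LOAD_KW = 1000.0  # hypothetical
OVERHEAD_RATIO = 0.5     # hypothetical

for eta in (0.90, 0.96):  # silicon-class vs GaN-class efficiency from the text
    it_kw, total_kw = facility_power(USEFUL_LOAD_KW, eta, OVERHEAD_RATIO)
    print(f"PSU efficiency {eta:.0%}: IT {it_kw:7.1f} kW, "
          f"total {total_kw:7.1f} kW, PUE {pue(total_kw, it_kw):.2f}")
```

With these assumptions, both cases report a PUE of 1.50, yet total facility power falls from about 1,667 kW to about 1,563 kW.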

Gallium Nitride (GaN) and Silicon Carbide (SiC) are members of a new class of electronic components known as Wide Bandgap Semiconductors (WBG). Using modern GaN semiconductors, efficiencies of 96% or better are readily achievable. And, as Styles points out, further voltage conversions are needed within the data center, and these too can be performed more efficiently with GaN, saving still more power.
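
To express the 90% versus 96% comparison in terms of heat, the sketch below computes the power a converter dissipates while delivering a fixed output. The 1 MW output is a hypothetical round number; only the two efficiencies come from the text.

```python
def conversion_loss_kw(output_kw: float, efficiency: float) -> float:
    """Heat dissipated by a converter delivering output_kw at the given efficiency."""
    input_kw = output_kw / efficiency
    return input_kw - output_kw

LOAD_KW = 1000.0  # hypothetical 1 MW of delivered DC power

si_loss = conversion_loss_kw(LOAD_KW, 0.90)   # silicon-class efficiency from the text
gan_loss = conversion_loss_kw(LOAD_KW, 0.96)  # GaN-class efficiency from the text

print(f"Si at 90%:  {si_loss:.1f} kW of heat")
print(f"GaN at 96%: {gan_loss:.1f} kW of heat")
print(f"Heat reduction: {1.0 - gan_loss / si_loss:.1%}")
```

Moving from 90% to 96% efficiency cuts converter heat from about 111 kW to about 42 kW per megawatt delivered, a reduction of 62.5%, which is why a few points of efficiency matter so much.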

By adopting GaN-based solutions, data centers can achieve higher operational efficiency. This approach addresses the PUE loophole, ensuring energy savings at multiple conversion stages within the infrastructure.

Wolfspeed reports that a SiC MOSFET-based motor drive inverter for data center cooling systems can achieve up to 98.6% efficiency at full load, demonstrating substantial gains in performance and energy conservation.

Wide Bandgap Semiconductors – How Do They Do It?

Wide Bandgap Semiconductors (WBG) enjoy three characteristics that set them apart from last-generation silicon-based semiconductors:

- Most electronic devices, including servers, perform multiple voltage conversions; these include AC to DC, DC to AC, and multiple changes in voltage level. These conversions center on rapidly switching the source voltage on and off many thousands of times per second; the faster the better. WBGs can switch far faster than silicon semiconductors.

- Semiconductors switch from “ON” to “OFF” to perform this chopping. WBGs achieve this “switching” with far less internal resistance than the old silicon devices, so less power is wasted in the form of heat.

- WBGs not only operate at higher temperatures but also dissipate that heat more readily, a boon to designers.
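
The loss mechanisms described in the list above are often estimated with first-order formulas: conduction loss as I²R while the switch is on, and hard-switching loss as roughly ½ × V × I × (transition time) × switching frequency. The sketch below compares two hypothetical devices; the on-resistances, transition times, 400 V bus, and 10 A current are illustrative assumptions rather than datasheet values, and the model ignores capacitive, gate-drive, and reverse-recovery losses.

```python
from dataclasses import dataclass

@dataclass
class Switch:
    """Hypothetical power switch; values are illustrative, not datasheet figures."""
    name: str
    r_on_ohm: float        # on-state resistance
    t_transition_s: float  # combined rise + fall time per switching cycle

def conduction_loss_w(sw: Switch, i_rms_a: float) -> float:
    # I^2 * R loss while the switch is ON (assumes i_rms_a is the switch RMS current)
    return i_rms_a ** 2 * sw.r_on_ohm

def switching_loss_w(sw: Switch, v_bus: float, i_load_a: float, f_sw_hz: float) -> float:
    # First-order hard-switching estimate: during each transition the switch
    # briefly sees both voltage and current at the same time.
    return 0.5 * v_bus * i_load_a * sw.t_transition_s * f_sw_hz

devices = [
    Switch("Si-like", r_on_ohm=0.010, t_transition_s=40e-9),  # hypothetical
    Switch("WBG-like", r_on_ohm=0.005, t_transition_s=4e-9),  # hypothetical
]

for f_sw in (100e3, 500e3):
    for dev in devices:
        cond = conduction_loss_w(dev, 10.0)
        swl = switching_loss_w(dev, 400.0, 10.0, f_sw)
        print(f"{dev.name:8s} @ {f_sw / 1e3:.0f} kHz: "
              f"conduction {cond:.2f} W, switching {swl:.2f} W")
```

Under these assumptions, the faster device keeps its switching loss at a few watts even at 500 kHz, while the slower device's switching loss climbs to tens of watts, which illustrates why higher switching frequencies favor WBG parts.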

According to Infineon, the implementation of SiC in data center cooling systems can lead to a reduction of up to 50% in system losses, significantly improving overall energy efficiency.

Articles previously published in Electropages cover these topics in great detail. These articles include Power Electronics in EVs: Key Roles and Challenges, Silicon Carbide and Gallium Nitride for EV Power Efficiency, and The Evolution of GaN in Consumer Electronics: Key Insights.

The Power Flow Within a Data Center

In an Infineon video[3], the company’s Gerald Deboy describes how power flows within a data center. The first stage converts AC power from the grid to 48 VDC, which feeds the rack housing the individual server boards. It is here that SiC devices, which can handle more power than GaN, come into play.

A Data Center Server Rack houses multiple server boards. Image source: NavePoint

As Carl Smith points out in another Infineon video[4], the old 12V standard was fine for classical servers, but not for data centers serving AI applications, where each rack now draws up to 100 kW. Delivering the same power at 12 V rather than 48 V requires four times the current. Because the power lost on a backplane is proportional to the square of the current, quadrupling the current causes 16 times the power loss, and 16 times the parasitic heat. Hence the adoption of the 48VDC standard.
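
The 12 V versus 48 V argument can be checked numerically. The sketch below uses the 100 kW per-rack figure from the text and a hypothetical 10 µΩ distribution resistance; the absolute losses depend entirely on that assumed resistance, but the 16:1 ratio does not.

```python
def bus_current_a(power_w: float, voltage_v: float) -> float:
    """Current needed to deliver power_w at voltage_v."""
    return power_w / voltage_v

def i2r_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Resistive (I^2 R) loss in the distribution path."""
    return current_a ** 2 * resistance_ohm

RACK_POWER_W = 100e3     # per-rack figure quoted in the text
R_BACKPLANE_OHM = 10e-6  # hypothetical distribution resistance, illustration only

losses = {}
for v in (12.0, 48.0):
    i = bus_current_a(RACK_POWER_W, v)
    losses[v] = i2r_loss_w(i, R_BACKPLANE_OHM)
    print(f"{v:4.0f} V bus: {i:7.1f} A, I^2R loss {losses[v]:6.1f} W")

print(f"12 V / 48 V loss ratio: {losses[12.0] / losses[48.0]:.1f}x")
```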

Infineon’s experts note that 48V architectures, combined with vertical power delivery techniques, can further streamline power distribution within data centers, minimising energy losses and enhancing system reliability. This approach is crucial for meeting the high energy demands of AI applications in modern data centers.

The next steps include intermediate bus converters that lower the 48V to the levels needed by various rack and server subsystems. But the story doesn’t end here, as there is still one more conversion to make: the AI superchip itself must be fed with less than 1 VDC at currents often in the range of 2,000 amps.

With current this high, the I²R rule again rears its head. To avoid generating still more parasitic heat, Mr. Smith describes the last-inch power delivery network (PDN). This involves an innovative vertical mounting technique that places the final power converter on the opposite side of the PCB, directly beneath the AI superchip: the shortest pathway possible.
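
The scale of the last-inch problem can be sketched with Ohm’s law. The code below takes the roughly 2,000 A figure from the text and assumes a hypothetical 0.8 V core rail plus hypothetical path resistances for a lateral route across the board versus a vertical route directly beneath the chip; the resistance values are illustrative only.

```python
CORE_CURRENT_A = 2000.0  # order of magnitude quoted in the text
CORE_VOLTAGE_V = 0.8     # hypothetical sub-1 V core rail

# Hypothetical resistances of the final path between converter and chip
paths_ohm = {
    "lateral (across the board)": 100e-6,
    "vertical (directly beneath)": 25e-6,
}

delivered_w = CORE_CURRENT_A * CORE_VOLTAGE_V
results = {}
for name, r in paths_ohm.items():
    drop_v = CORE_CURRENT_A * r       # Ohm's law: voltage lost along the path
    loss_w = CORE_CURRENT_A ** 2 * r  # I^2 R heat dissipated in the path
    results[name] = loss_w
    print(f"{name}: drop {drop_v * 1e3:.0f} mV, loss {loss_w:.0f} W "
          f"({loss_w / delivered_w:.1%} of the {delivered_w:.0f} W delivered)")
```

With these assumed values, the lateral path drops 200 mV and wastes 400 W (25% of the 1,600 W delivered), while the vertical path drops 50 mV and wastes 100 W, which is why shortening the final path matters so much at sub-volt, kiloamp levels.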

Cooling Losses Ameliorated Through the Use of SiC

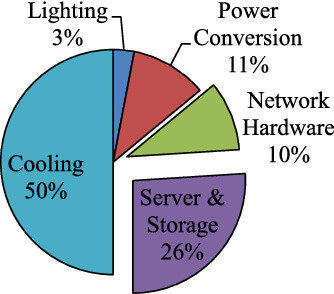

As far as data centers are concerned, cooling is clearly the elephant in the room. According to X-trinsic’s Robert Rhoades[5], “Within a data center, cooling systems account for ~1/2 of all power consumed.”

Typical Power Uses in a Data Center. Image source: SEMI

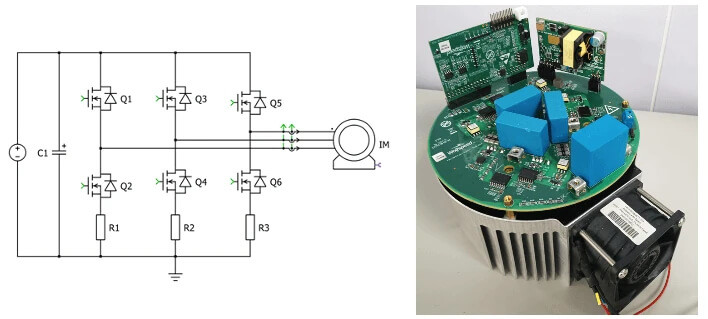

An article published by Wolfspeed[6] places the percentage in a somewhat lower range of 20 to 45%. The company’s SiC-based 11 kW Three-Phase Motor Drive Inverter is claimed to achieve 98.6% efficiency at full load.

11 kW Three-Phase Motor Drive Inverter with 1200 V SiC MOSFET. Image source: Wolfspeed

Because SiC improves the efficiency of the cooling system itself, designers can employ smaller heat sinks and deploy more compact cooling systems, always an advantage in tight data center spaces.

Challenges and Opportunities

A 5% increase in efficiency gained by using WBG devices is worth more than 5%, because it also means less parasitic heat is generated. That, in turn, means less cooling is required, and since cooling itself consumes energy, overall system efficiency improves further.
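
The compounding effect can be sketched with a simple model in which every watt of conversion loss must also be removed by cooling equipment that consumes a fixed number of watts per watt of heat. The 0.5 W/W cooling figure, the 1 MW load, and the step from 90% to 95% efficiency are hypothetical choices for illustration.

```python
def grid_power_kw(load_kw: float, efficiency: float, cooling_w_per_w_heat: float) -> float:
    """Grid draw for a converter feeding load_kw, plus cooling for its losses.

    cooling_w_per_w_heat is hypothetical: watts of cooling power spent per
    watt of heat removed. Only the converter's own loss is counted here.
    """
    loss_kw = load_kw / efficiency - load_kw
    return load_kw + loss_kw * (1.0 + cooling_w_per_w_heat)

LOAD_KW = 1000.0  # hypothetical
K_COOL = 0.5      # hypothetical cooling overhead per unit of heat

before = grid_power_kw(LOAD_KW, 0.90, K_COOL)
after = grid_power_kw(LOAD_KW, 0.95, K_COOL)
conversion_only = LOAD_KW / 0.90 - LOAD_KW / 0.95

print(f"Saved in conversion alone: {conversion_only:.1f} kW")
print(f"Saved including cooling:   {before - after:.1f} kW")
```

Under this model, the grid-level saving is the conversion saving multiplied by (1 + cooling factor), here about 87.7 kW instead of 58.5 kW.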

As this analysis describes, a functioning data center, with its multiple racks each holding multiple servers, requires multiple voltage conversions. Each conversion represents some inefficiency, however small, and each inefficiency generates heat that must be dealt with.
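
Because the conversions are cascaded, their efficiencies multiply. The sketch below chains three stages: grid AC to 48 V, an intermediate bus converter (assumed here to output 12 V), and the final sub-1 V conversion. All per-stage efficiencies are hypothetical illustrations, not measured values for any product.

```python
from math import prod

# Hypothetical per-stage efficiencies, for illustration only
chains = {
    "silicon-based": {"AC to 48 V": 0.90, "48 V to 12 V": 0.95, "12 V to <1 V": 0.88},
    "WBG-based": {"AC to 48 V": 0.96, "48 V to 12 V": 0.975, "12 V to <1 V": 0.91},
}

CHIP_POWER_KW = 1.0  # power that must finally reach the processor

for name, stages in chains.items():
    eta = prod(stages.values())  # cascaded stages: efficiencies multiply
    grid_kw = CHIP_POWER_KW / eta
    print(f"{name}: end-to-end {eta:.1%}, grid draw {grid_kw:.3f} kW, "
          f"heat {grid_kw - CHIP_POWER_KW:.3f} kW per kW delivered")
```

With these assumed numbers, the silicon chain delivers about 75% end to end and the WBG chain about 85%, so the heat produced per kilowatt reaching the processor falls from roughly 0.33 kW to roughly 0.17 kW.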

It’s easy to think that further increasing the already high efficiency of WBG chips is hardly worth the bother. That might be true if the small amount of wasted electricity simply evaporated, like money frittered away on a Saturday night. But it doesn’t; instead, it manifests as heat, which can degrade system performance or even shut the data center down completely unless it is dealt with. And the easiest way to deal with heat is not to generate it in the first place.

The key to not generating parasitic heat is to use ever more efficient chips in the many layers of voltage converters necessary for data center operation.

That’s why, as the scope of server-based AI continues to expand without limit and AI superchips demand ever more power, it is imperative that there be no letup in the design and manufacture of still better WBGs.

Wrapping Up

Server-based generative AI will place far greater demands on data centers than previous tasks such as Google searches or video streaming. The unprecedented power requirements of present and future data centers present a “supply chain” dilemma similar to that of an army. No food and ammunition, no army movement; in the same way, absent or inefficient electrical power will stop server-based AI dead in its tracks.

The development and availability of WBGs allow each of the many voltage conversions necessary for data center operation to function with greater efficiency. Going forward, power demands will be enormous, and the old 12V standard is no longer adequate. This closely parallels the move from 400 volts to 800 volts in EVs, driven by the same I²R reasoning.

The efficiency gains that WBGs deliver are doubly important. Not only are there the initial few percentage points of savings, but the power saved, nipped in the bud, never gets a chance to produce unwanted heat. And because that heat is never produced, the energy that would otherwise have been required to remove it is also saved, further improving the data center’s electrical efficiency.

References:

- [1] AI in Data Centers: Increasing Power Efficiency with GaN: https://gansystems.com/newsroom/ai-in-data-centers-increasing-power-efficiency-with-gan/

- [2] GaN: Closing the data center “PUE Loophole”: https://www.datacenterdynamics.com/en/opinions/gan-closing-the-data-center-pue-loophole/

- [3] The power of efficiency: the sweet spot for Si, SiC, and GaN in data centers: https://www.youtube.com/watch?v=7hfUbNhBUT8

- [4] How to optimize AI server power flow with 48V architectures and vertical power delivery: https://www.youtube.com/watch?v=GTQU1W8hFhc

- [5] SiC Market Trends & Perspectives: https://www.semi.org/sites/semi.org/files/2024-04/SEMI%20Webinar%20-%20Rhoades%2020240403%20SiC%20Market.pdf

- [6] Design Next-Generation Data Center Cooling Systems with Silicon Carbide: https://www.wolfspeed.com/knowledge-center/article/design-next-generation-data-center-cooling-systems-with-silicon-carbide/

Glossary of Key Terms:

- WBG. Wide bandgap semiconductors, starring silicon carbide and gallium nitride.

- Data Center Rack. A metal cabinet that houses several individual servers, cables, and associated equipment.