Next-Generation Spatial Awareness: Driving the Future of Vehicle Safety

08-12-2023 | By Mark Patrick

The automotive industry is changing rapidly. Propulsion systems are moving to sustainable fuels, and vehicles increasingly aid the driver through advanced driver-assistance systems (ADAS). These systems warn of hazards, and advanced versions can apply the brakes or even steer the vehicle if the driver does not act in time.

For these systems to operate, the vehicle must be aware of its position and, more importantly, its surroundings. Hazards such as objects, pedestrians and other vehicles must be identified rapidly and accurately so the correct action can be taken in all weather and lighting conditions.

While these advances are largely driven by the automotive industry as it responds to consumer demands for greater safety in vehicles, governments and road safety organisations are getting in on the act as well, adding legislation and penalising vehicles without these features in their safety ratings.

Increasingly, the technologies used by vehicles to detect their external surroundings are also being deployed inside the cabin to detect and monitor passengers, delivering higher levels of safety and convenience.

Vehicle Autonomy

It is generally accepted that the vast majority of accidents occur due to drivers’ poor decision-making or inattentiveness. Therefore, supporting the driver with an ADAS that can scan the environment for hazards and provide a warning is an effective way to enhance road safety.

Multiple technologies are available that can provide the spatial awareness required for ADAS functions. The most commonly used include:

- lidar,

- radar, and

- image-sensor-based cameras.

Other technologies, such as ultrasonic sensing, are mostly confined to parking distance control (PDC) due to their limited range.

Lidar scans the scene with laser light and measures the reflections to detect objects. In good weather, it can deliver high resolution over a long distance, but its performance is curtailed in conditions such as heavy rain or thick fog, which scatter the beam. It can provide three-dimensional information about objects, which is valuable in calculating their size.

Radar operates in a similar way to lidar but uses radio waves in place of light. These waves bounce off objects and are reflected back to a vehicle-mounted receiver. Processing these reflections allows an ADAS to determine the distance, speed and direction of objects within range of the radar. Radar offers lower resolution than lidar, but it is far less affected by poor weather.
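The link between a reflection and the distance and speed of an object can be illustrated with two textbook relationships: range follows from the round-trip delay of the echo, and radial speed follows from the Doppler shift of the carrier. The sketch below is a simplified illustration only. The function names are hypothetical, the 77GHz carrier is an assumed example value, and production automotive radars derive these quantities through more involved signal processing than a direct delay measurement.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_round_trip(delay_s: float) -> float:
    """Distance to a target (m) from the echo's round-trip delay (s)."""
    # The wave travels to the target and back, hence the division by two.
    return C * delay_s / 2.0


def radial_velocity_from_doppler(doppler_hz: float, carrier_hz: float) -> float:
    """Radial speed (m/s) from the Doppler shift; positive means approaching."""
    wavelength_m = C / carrier_hz
    # Doppler shift for a reflected wave is f_d = 2 * v / wavelength.
    return doppler_hz * wavelength_m / 2.0


if __name__ == "__main__":
    # Assumed example values, not taken from any specific sensor.
    print(f"Range for a 1 us echo: {range_from_round_trip(1e-6):.1f} m")
    speed = radial_velocity_from_doppler(15_400.0, 77e9)
    print(f"Speed for a 15.4 kHz shift at 77 GHz: {speed:.1f} m/s")
```

Direction is not covered here; it is typically derived by comparing the signal across several receive antennas.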

Radar has evolved from its early incarnations, with modern technologies such as Texas Instruments’ mmWave sensors offering spatial and velocity resolution three times that of traditional solutions. When used for forward-facing radar, TI AWR mmWave sensors can detect objects moving at up to 300km/h with an angular resolution of less than 1 degree.

Image sensors also form an integral part of an ADAS. Although they too are affected by weather and by challenging high-dynamic-range (HDR) scenes such as sunsets and oncoming headlights, the latest automotive image sensors offer 140dB of dynamic range or more. This ensures both the dark and light sections of a scene are accurately captured, allowing the control systems to correctly interpret the road ahead, distinguish pedestrians and vehicles from the surroundings and act if needed. Unlike radar or lidar, high-resolution image sensors can also read speed limit signs, providing valuable input to an ADAS.
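To put the 140dB figure in perspective, it can be converted into a contrast ratio. The short sketch below assumes the convention commonly used for image sensors, in which dynamic range in decibels is 20·log10 of the ratio between the brightest and darkest signals the sensor can resolve. The function names are hypothetical.

```python
import math


def db_to_contrast_ratio(dynamic_range_db: float) -> float:
    """Brightest-to-darkest signal ratio, assuming dB = 20 * log10(ratio)."""
    return 10 ** (dynamic_range_db / 20.0)


def ratio_to_stops(ratio: float) -> float:
    """Express a contrast ratio in photographic stops (doublings of light)."""
    return math.log2(ratio)


if __name__ == "__main__":
    ratio = db_to_contrast_ratio(140.0)
    print(f"140 dB corresponds to roughly {ratio:,.0f}:1")
    print(f"That is about {ratio_to_stops(ratio):.1f} stops")
```

Under that assumption, 140dB corresponds to a ratio of about ten million to one between the brightest and darkest parts of a scene.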

As each of the sensing modalities has its strengths and weaknesses relative to the others, it is common for the data from each type of sensor to be combined through sensor fusion. In this way, the system achieves the best possible picture of the road ahead in all weather and lighting conditions. Sensor fusion also provides a degree of redundancy, which is highly valuable in systems that are relied on so heavily.
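One simple way to picture sensor fusion is inverse-variance weighting, in which each sensor's distance estimate is weighted by how much it can be trusted at that moment. The sketch below is an illustration under stated assumptions rather than a description of how any production ADAS works: the `Measurement` type, the `fuse` function and all of the variance values are hypothetical, and real systems generally track objects over time instead of fusing single readings.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Measurement:
    sensor: str
    distance_m: float
    variance: float  # assumed noise variance in m^2; smaller means more trusted


def fuse(measurements: List[Measurement]) -> Tuple[float, float]:
    """Combine distance estimates using inverse-variance weighting.

    Returns the fused distance and the variance of the fused estimate.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    fused = sum(w * m.distance_m for w, m in zip(weights, measurements)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Hypothetical readings of the same object in clear weather.
    readings = [
        Measurement("lidar", 50.0, 0.01),
        Measurement("radar", 50.6, 0.25),
        Measurement("camera", 52.0, 4.0),
    ]
    print(fuse(readings))
    # Redundancy: if lidar drops out (for example in fog), the rest still fuse.
    print(fuse(readings[1:]))
```

The fused variance is always lower than that of the best individual sensor, and the function still returns an estimate when one sensor is missing, which mirrors the redundancy benefit described above.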

The contribution that ADAS features make to road safety is increasingly being recognised. Euro NCAP has now incorporated specific pedestrian accident scenarios into its testing to evaluate how ADAS perform in real-world conditions. Similarly, it has added a total of six scenarios to test autonomous emergency braking (AEB) in real-world situations.

Earlier in 2023, the US National Highway Traffic Safety Administration (NHTSA) announced a proposal that would make pedestrian detection and AEB mandatory features on passenger cars and light trucks. The intention is to reduce collisions with pedestrians as well as rear-end impacts. In its statement, the NHTSA estimated this would save one life per day and prevent around 2,000 injuries per month.

Monitoring Inside the Cabin

The technologies that monitor the world outside the vehicle are increasingly being turned inside the cabin as well, primarily to enhance safety. As driver inattention is a primary cause of accidents, some vehicles now have a camera focused on the driver’s eyes. This allows safety systems to detect early signs of drowsiness and to see whether the driver is looking elsewhere as the vehicle approaches a static vehicle at speed, so that AEB can be applied earlier than it would be if the driver were paying attention.

The technology is also being used to enhance passenger safety. As passengers vary in size, a one-size-fits-all approach to airbag deployment is sub-optimal. At the simplest level, determining which seats are occupied allows only relevant airbags to be deployed. However, using an image sensor can provide information about the size of the passenger, which can be used to adjust the deployment force of the airbag to an appropriate level.

Another cabin-related issue that has recently come into focus is that of heatstroke and heat-related deaths, especially in infants who are left in closed vehicles, whether intentionally or inadvertently. In-cabin image sensors can recognise people and distinguish between a soft toy and an infant, although recognition may not be possible if the infant is under a blanket. For this reason, mmWave technology that can offer 100-micron precision is a preferred solution. With this accuracy, living things (i.e., humans and pets) can be detected by the movement of their breathing, even when covered.

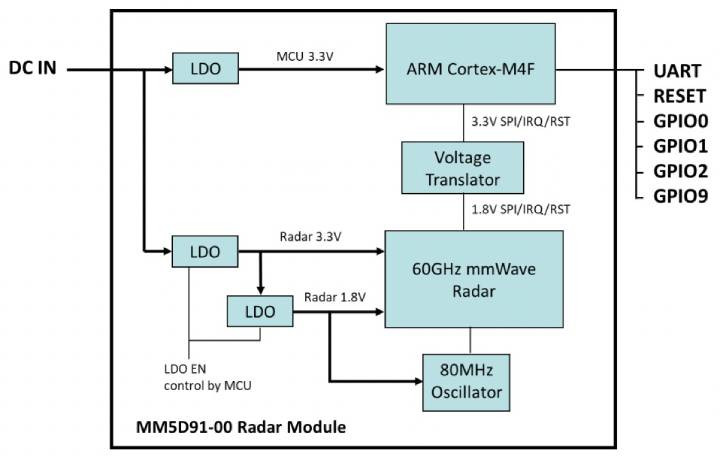

To simplify the design process of such solutions, the MM5D91-00 60GHz presence detection sensor module from Jorjin Technologies contains everything needed to create an operational prototype. It has an onboard regulator, one transmit antenna, three receive antennas and an Arm® Cortex-M4F-based processor system.
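The breathing-based detection described above relies on the fact that a tiny movement of the chest changes the phase of the reflected radar signal. The sketch below illustrates the textbook relationship between phase change and displacement, and a crude breathing-rate estimate from a displacement trace. It is a generic illustration, not the firmware or API of any particular module: the function names, the 1mm chest movement, the 0.5Hz breathing rate and the 20Hz sample rate are all assumed values, and the phase is assumed to be already unwrapped.

```python
import math
from typing import List

C = 299_792_458.0  # speed of light in m/s


def phase_to_displacement_m(delta_phase_rad: float, carrier_hz: float = 60e9) -> float:
    """Target displacement (m) for a given change in reflected-signal phase."""
    wavelength_m = C / carrier_hz
    # The round trip doubles the path change: delta_phase = 4 * pi * d / wavelength.
    return wavelength_m * delta_phase_rad / (4.0 * math.pi)


def breaths_per_minute(displacements_m: List[float], sample_rate_hz: float) -> float:
    """Estimate breathing rate by counting upward zero crossings."""
    mean = sum(displacements_m) / len(displacements_m)
    centred = [d - mean for d in displacements_m]
    rising = sum(1 for a, b in zip(centred, centred[1:]) if a < 0.0 <= b)
    duration_s = len(displacements_m) / sample_rate_hz
    return 60.0 * rising / duration_s


if __name__ == "__main__":
    wavelength_m = C / 60e9
    sample_rate_hz = 20.0
    # Simulated phase trace: chest moving +/-1 mm at 0.5 Hz for 20 seconds.
    phases = [
        4.0 * math.pi * 0.001 * math.sin(2.0 * math.pi * 0.5 * i / sample_rate_hz + 1.0)
        / wavelength_m
        for i in range(400)
    ]
    trace = [phase_to_displacement_m(p) for p in phases]
    print(f"Estimated rate: {breaths_per_minute(trace, sample_rate_hz):.0f} breaths/min")
```

At 60GHz the wavelength is about 5mm, so a 100-micron movement shifts the phase by roughly a quarter of a radian, which helps explain why such small chest movements are measurable.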

Gesture Control

Monitoring the driver for distraction is beneficial, but many would argue that eliminating the distractions is a better approach. One of the most common distractions is fumbling for a small button on the dashboard or trying to delve into a multi-layer menu within an infotainment system while driving.

Cognisant of these issues, some automakers are introducing human-machine interfaces (HMI) that allow the driver to make gestures to control the functions of the vehicle. In some cases, gesture control augments the existing physical controls, although the technology may eventually replace buttons and touchscreens.

Based upon time-of-flight (ToF) technology, such as the STMicroelectronics VL53L8CH ToF sensor, a typical gesture recognition system will integrate an infrared (IR) light source to ensure good performance in strong ambient light. The system will also include a single-photon avalanche diode (SPAD) detection array to detect reflected light.

From this, the system can create a depth map of a hand placed in its field-of-view (FoV) and deduce a command from pre-configured movements. For example, an open palm commonly means ‘stop’ while an upward movement might scroll a menu upwards.
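The step from depth map to command can be sketched as follows: zones closer than a threshold are treated as the hand, the centre of those zones is tracked across frames, and the dominant direction of travel is mapped to a gesture. This is a minimal sketch under stated assumptions, not the algorithm of any particular sensor or vehicle. The 8x8 zone grid, the distance threshold, the function names and the convention that row 0 is the top of the field of view are all hypothetical.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]  # one distance in mm per zone of the depth map


def hand_centroid(frame: Frame, max_hand_mm: float = 400.0) -> Optional[Tuple[float, float]]:
    """Mean (row, column) of zones closer than max_hand_mm, or None if no hand."""
    points = [
        (r, c)
        for r, row in enumerate(frame)
        for c, distance in enumerate(row)
        if distance < max_hand_mm
    ]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


def classify_swipe(frames: List[Frame], min_shift: float = 2.0) -> str:
    """Return 'up', 'down', 'left', 'right' or 'none' for a sequence of frames."""
    centroids = [c for c in map(hand_centroid, frames) if c is not None]
    if len(centroids) < 2:
        return "none"
    d_row = centroids[-1][0] - centroids[0][0]
    d_col = centroids[-1][1] - centroids[0][1]
    if max(abs(d_row), abs(d_col)) < min_shift:
        return "none"
    if abs(d_row) >= abs(d_col):
        return "up" if d_row < 0 else "down"  # row 0 is assumed to be the top
    return "right" if d_col > 0 else "left"


def make_frame(hand_rows: List[int], size: int = 8) -> Frame:
    """Synthetic frame: background at 1000 mm, hand at 200 mm in columns 3-4."""
    return [
        [200.0 if r in hand_rows and c in (3, 4) else 1000.0 for c in range(size)]
        for r in range(size)
    ]


if __name__ == "__main__":
    upward = [make_frame([6, 7]), make_frame([4, 5]), make_frame([1, 2])]
    print(classify_swipe(upward))
```

A static gesture such as an open palm would need a different test, for example on the shape of the hand region rather than its movement.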

With a system of this type, the driver no longer has to search for a specific button or menu option, as commands only rely on a gesture within a fairly broad FoV. Thus, the ability to concentrate solely on the road ahead is greatly enhanced.

Conclusion

Spatial awareness will be a key element of future vehicles, eventually allowing them to operate with full autonomy. Even now, ADAS rely upon hazard perception to warn the driver of obstacles in their path.

Multiple technologies exist, including lidar, radar and image sensors. While their relative merits can be debated, the best solution clearly comes from combining these modalities through sensor fusion.

More recently, spatial awareness has become important within the cabin. It allows airbags to be deployed based on the passengers present and infants left behind in a car to be detected. Related technologies such as ToF are bringing gesture control to the driver, removing one more distraction and keeping our roads safer.