The Evolution of Automotive Radars into Super Sensors

15-05-2024 | By Hwee Yng Yeo

Electropages exclusively presents an insightful article by Hwee Yng Yeo, E-Mobility Advocate and Industry & Solutions Marketing Manager at Keysight Technologies, on the pivotal role of automotive radar technology in advancing autonomous vehicle safety. This article explores the historical evolution, current innovations, and the promising future of radar systems, crucial for enhancing the precision and reliability of self-driving cars.

Beyond What Meets the Eye

Self-driving technology is likened to training the autonomous vehicle (AV) to drive like humans – or hopefully better than humans. Just as humans rely on their senses and cognitive responses for driving, sensor technology is an indispensable part of autonomous driving enablement.

Among camera, radar, and lidar sensors, radar has perhaps the longest history in aiding transportation safety. One of the very first patented radar technologies used in transportation safety was the “telemobiloscope,” created by German inventor Christian Hülsmeyer as a collision avoidance tool for ships.

Radar technology has since come a long way and is now an important enabler of automotive safety features, with a sizable market estimated at over US$5 billion in 2023.

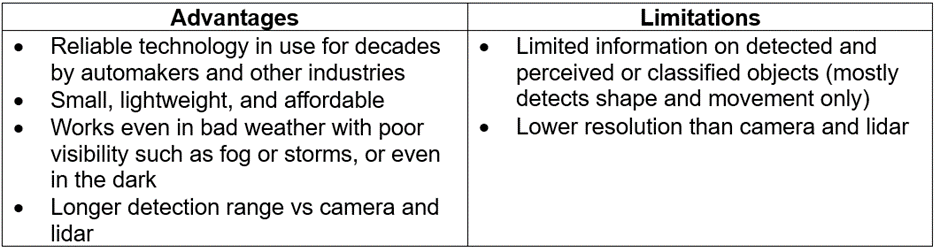

Automotive radar offers several advantages, as listed in Table 1. These advantages continue to drive its adoption by engineers for advanced driver assistance systems (ADAS). Radars in modern vehicles enable automatic emergency braking, forward collision warning, blind spot detection, lane change assist, rear collision warning, adaptive cruise control at highway speeds, and stop-and-go operation in bumper-to-bumper traffic.

Table 1. Advantages and current limitations of automotive radar technology

While automotive radar technology offers many advantages, engineers are pushing the boundaries to break through its limitations. Refining resolution has remained a key challenge over the years, but recent innovations are leading to radars that can provide more granularity in object detection.

Differentiating in 3D

Conventional 3D automotive radar sensors use radio frequencies to detect objects in three dimensions: distance, horizontal position, and Doppler, which gives the velocity of the object. To move automotive radar sensors up the safety value chain to aid autonomous driving, the industry continues to break through the limitations of 3D radar. Since 2022, both Europe and the U.S. have phased out the use of 24 GHz ultra-wideband (UWB) radar frequencies, spanning 21.65 GHz to 26.65 GHz, due to spectrum regulations and standards developed by the European Telecommunications Standards Institute (ETSI) and the Federal Communications Commission (FCC).

In phasing out the 24 GHz UWB spectrum, the regulating authorities opened up a contiguous 5 GHz band of spectrum from 76 GHz to 81 GHz for vehicular radar technologies. While the 76 – 77 GHz band is used for long-range detection, the 77 – 81 GHz band is used for short-range, high-precision detection.

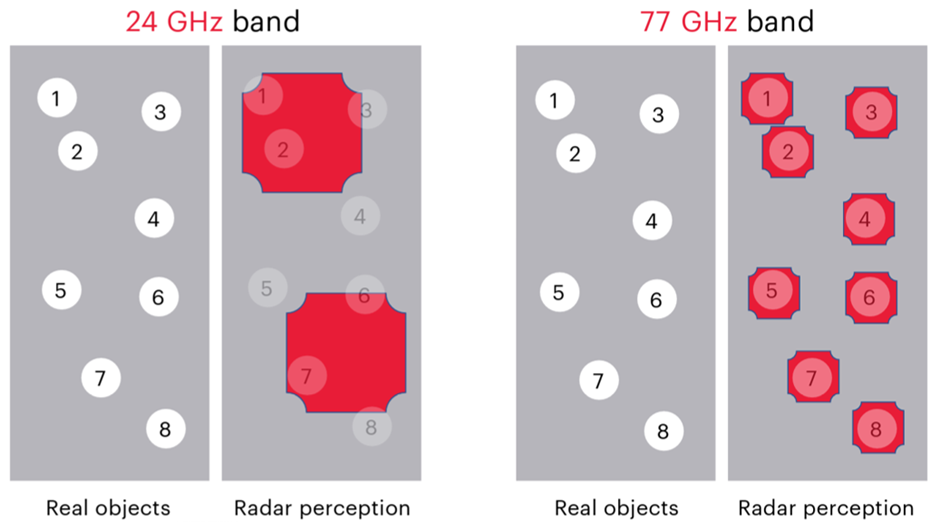

The higher frequency and wider bandwidth of advanced automotive radar systems help to enhance the radar’s range resolution, which determines how far apart objects need to be before they are distinguishable as two objects. For instance, in a 77 GHz radar system, two objects need only be 4 cm apart for the radar to distinguish them as individual objects, versus the older 24 GHz system, where the objects need to be at least 75 cm apart for the radar to detect them as distinct objects (Figure 1).

Figure 1. The 24 GHz radar (left) cannot distinguish individual objects that are too close, versus radar sensors operating in the 77 GHz band (right), which identify the targets as distinct objects.
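The link between bandwidth and range resolution can be checked with the standard relation ΔR = c / (2B). The sketch below is illustrative only: the article does not state the sweep bandwidths behind its figures, so the 200 MHz value for the older 24 GHz system is an assumption chosen because it reproduces the quoted 75 cm, and the 4 GHz value assumes the full 77 – 81 GHz band is swept.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Ideal range resolution of a swept-bandwidth radar: c / (2 * B)."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return C / (2.0 * bandwidth_hz)


# Bandwidths are assumptions for illustration, not values from the article.
examples = [
    ("24 GHz system, assumed 200 MHz sweep", 200e6),
    ("1 GHz sweep", 1e9),
    ("77-81 GHz band, assumed 4 GHz sweep", 4e9),
]

for label, bandwidth_hz in examples:
    resolution_cm = range_resolution_m(bandwidth_hz) * 100.0
    print(f"{label}: {resolution_cm:.1f} cm")
```

Under these assumptions the results come out at roughly 75 cm, 15 cm, and just under 4 cm, in line with the figures quoted above. Real sensors typically achieve somewhat coarser resolution than this ideal value because of windowing and other processing choices.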

The ability of automotive radars to tell objects apart is important. Imagine a girl and her dog standing together by the curb. A human driver can, under most circumstances, easily recognise the scenario and most likely pre-empt any sudden moves by the dog. However, only an automotive radar sensor with a sufficiently wide bandwidth can detect the girl and the dog separately and provide the correct information to the autonomous driving system (see Figure 2).

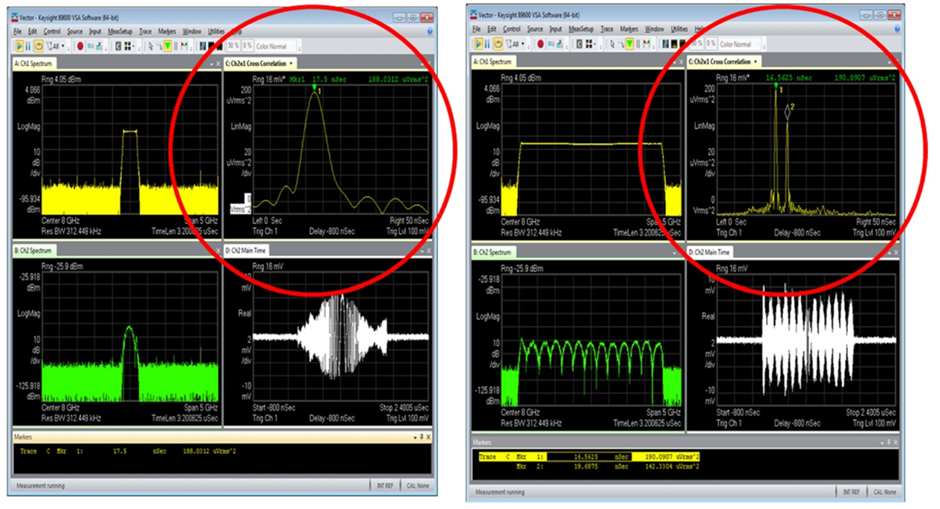

Figure 2. Test comparison between 1 GHz (left) and 4 GHz (right) bandwidths shows that only the wider bandwidth on the right can detect two different objects.

Navigating More Safely with 4D Radars and Beyond

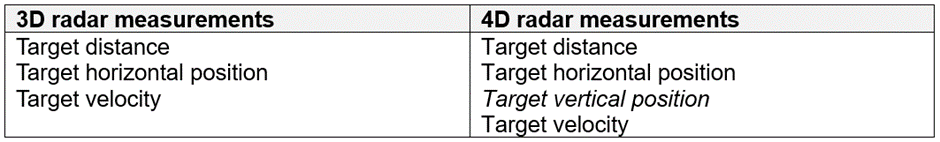

As humans hand over the steering wheel to the AV, the radar sensors must be able to accurately detect, classify, and track objects in the vehicle’s surrounding environment. This need is spurring the development of 4D radars, which provide more accurate and detailed information about objects in 3D space, including their vertical positions, on top of the distance, horizontal positions, and velocities that are already reported by 3D radars (see Table 2).

Table 2. Difference between 3D and 4D radar

The emergence of 4D imaging radars means autonomous vehicles can detect smaller objects with greater resolution, with the imaging radar providing a more complete “all-around” environmental mapping.

The ability to detect the height of objects with 4D and imaging radars is essential for autonomous vehicles to interpret objects in the vertical perspective correctly. For example, an AV’s 3D radar may erroneously interpret signals bouncing off a flat manhole cover as an obstacle on the road, causing the vehicle to come to a sudden stop to avoid a non-existent obstacle.
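To make the manhole cover example concrete, here is a minimal sketch of how an elevation angle could be turned into a height estimate. Everything in it is hypothetical: the `Detection4D` fields, the 0.5 m sensor mounting height, and the 0.15 m obstacle threshold are illustrative assumptions, not values from any radar product or from this article.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection4D:
    """One radar detection with the four quantities a 4D radar reports."""
    range_m: float              # distance to the reflection
    azimuth_deg: float          # horizontal angle
    elevation_deg: float        # vertical angle (negative = below the sensor)
    radial_velocity_mps: float  # Doppler-derived velocity


def height_above_road_m(det: Detection4D, sensor_height_m: float = 0.5) -> float:
    """Estimate the reflection's height above the road surface."""
    return sensor_height_m + det.range_m * math.sin(math.radians(det.elevation_deg))


def is_likely_road_level(det: Detection4D,
                         sensor_height_m: float = 0.5,
                         min_obstacle_height_m: float = 0.15) -> bool:
    """True if the reflection sits below the assumed obstacle height threshold."""
    return height_above_road_m(det, sensor_height_m) < min_obstacle_height_m


# A reflection from the road surface 20 m ahead, seen from a sensor 0.5 m up.
manhole = Detection4D(20.0, 0.0, math.degrees(math.asin(-0.5 / 20.0)), -10.0)
# A reflection at sensor height 20 m ahead, e.g. the rear of a stopped car.
stopped_car = Detection4D(20.0, 0.0, 0.0, -10.0)

print(is_likely_road_level(manhole))      # True
print(is_likely_road_level(stopped_car))  # False
```

Without the `elevation_deg` field, both detections would look identical to the downstream algorithm, which is the ambiguity the 3D radar faces. A production system would combine height with many other cues rather than rely on a single threshold.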

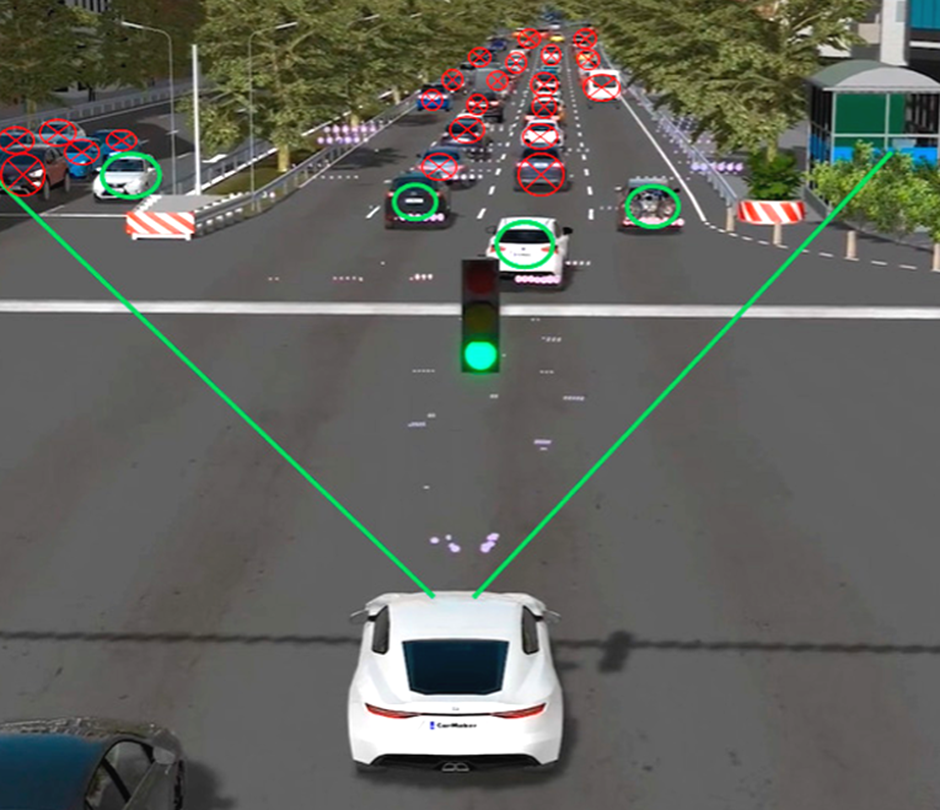

In the real world, the traffic “events” that automotive radars detect are never isolated episodes like the examples above. The human driver manoeuvres amongst hundreds of other vehicles, pedestrians, roadworks, and even the very occasional wild moose crossing the road (Figure 3), using a combination of information perceived by sight and sound, as well as traffic rules, experience, and instinct.

Figure 3. A question to moose – or muse over: If the moose crosses your AV’s path in the dark of night, will it freeze in the vehicle’s headlights, or would the long-range radar have provided ample warning for the vehicle to slow to a stop at a respectful distance?

Similarly, the autonomous vehicle relies on accurate data from the radar sensors and other systems, including camera, lidar, and vehicle-to-everything (V2X) systems, to detect the traffic environment. The different data streams feed the ADAS or autonomous driving algorithms, which help to perceive the relative position and speed of the detected vehicles or objects. Control algorithms in the ADAS or autonomous driving systems then help to trigger either passive responses, like flashing warning lights to alert the driver of a blind spot danger, or active responses, such as applying emergency brakes to avoid a collision.
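As a rough illustration of how relative position and speed might map to a passive or an active response, the sketch below uses time to collision (range divided by closing speed). This is a simplified stand-in rather than how any particular ADAS is implemented, and the function names and the 2.5 s and 1.0 s thresholds are hypothetical values chosen only for the example.

```python
import math


def time_to_collision_s(range_m: float, closing_speed_mps: float) -> float:
    """Time until impact if neither party changes speed.

    closing_speed_mps is positive when the gap is shrinking.
    """
    if closing_speed_mps <= 0:
        return math.inf  # gap is steady or growing, so no collision course
    return range_m / closing_speed_mps


def select_response(range_m: float, closing_speed_mps: float,
                    warn_ttc_s: float = 2.5, brake_ttc_s: float = 1.0) -> str:
    """Pick a response from hypothetical time-to-collision thresholds."""
    ttc = time_to_collision_s(range_m, closing_speed_mps)
    if ttc <= brake_ttc_s:
        return "active: emergency braking"
    if ttc <= warn_ttc_s:
        return "passive: warn driver"
    return "none"


print(select_response(50.0, 10.0))  # 5.0 s to collision
print(select_response(20.0, 10.0))  # 2.0 s to collision
print(select_response(8.0, 10.0))   # 0.8 s to collision
```

The three calls return "none", "passive: warn driver", and "active: emergency braking" respectively. The quality of the decision depends entirely on the accuracy of the range and closing speed supplied by the sensors.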

Putting Automotive Radar Detections to the Test

Currently, automakers and radar module providers test the functionality of their radar modules using both software and hardware. There are two key methods for hardware-based tests:

- Using corner reflectors that are placed at different distances and angles from the radar device under test (DUT), with each reflector representing a static target. When a change of this static scenario is needed, the corner reflectors must be physically moved to their new positions.

- Using a radar target simulator (RTS) that enables an electronic simulation of the radar targets, thus allowing for both static and dynamic targets, along with simulating the target’s distance, velocity, and size. RTS-based functional testing falls short in complex, realistic scenarios with more than 32 targets. It also cannot characterise 4D and imaging radar capabilities for detecting extended objects, which are objects represented by point clouds instead of just one reflection.

Figure 4. Testing radar sensors with radar target simulation does not provide a complete traffic scene for validating autonomous driving applications.

Testing radar units against a limited number of objects delivers an incomplete view of driving scenarios for AVs. It masks the complexity of the real world, especially in urban areas with different intersections and turning scenarios involving pedestrians, cyclists, and electric scooters.

Smartening Up Radar Algorithms

Increasingly, machine learning is helping developers train their ADAS algorithms to better interpret and classify data from radar sensors and other sensor systems. More recently, the term YOLO has surfaced amongst headlines on automotive radar algorithms. The acronym stands for ‘you only look once’. It is apt, given that what the radar perceives and how the ADAS algorithms interpret the data are mission-critical processes that can be a matter of life or death. YOLO-based radar target detection aims to accurately detect and classify multiple objects simultaneously.

It's crucial to subject both the physical radar sensors and the ADAS algorithms to rigorous testing before these self-driving systems move onto the final costly road testing stage. To create a more realistic 360° view of varying real-world traffic scenarios, automakers have started using radar scene emulation technology to bring the road to the lab.

One of the key challenges of moving to Level 4 and 5 autonomous driving is the ability to distinguish between dynamic obstacles on the road and decide autonomously on a course of action, rather than just raising a flag or flashing a warning on the dashboard. In emulating a traffic scenario, too few points per object may cause a radar to erroneously detect closely spaced targets as a single entity. This makes it difficult to fully test not just the sensor but also the algorithms and decisions that rely on data streaming from the radar sensor.

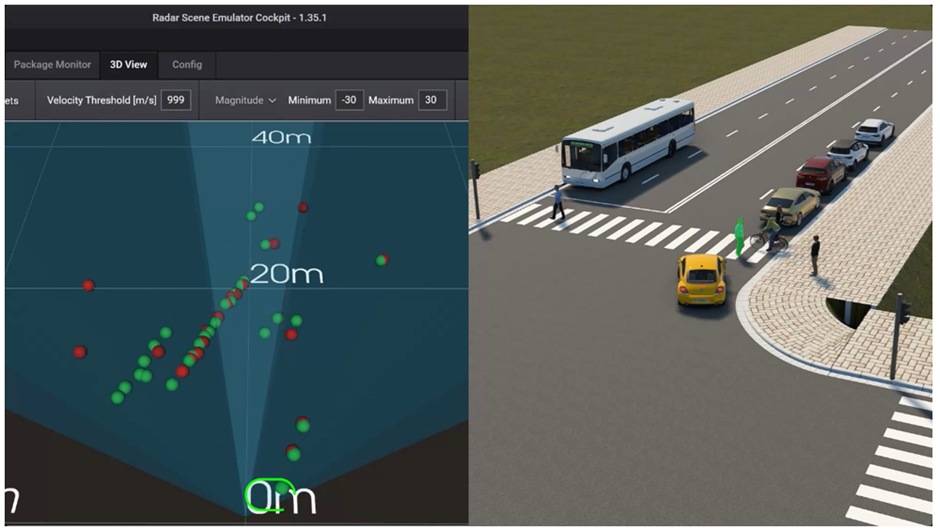

A new radar scene emulation technology uses ray tracing and point clouds to extract the relevant data from highly realistic simulated traffic scenes and provides better detection and differentiation of objects (see Figure 5). Using novel millimetre wave (mmWave) over-the-air technology, the radar scene emulator can generate multiple static and dynamic targets from as close as 1.5 metres to as far away as 300 metres, and with velocities of 0 to 400 km/h, for short-, medium-, and long-range automotive radars. This provides a much more realistic traffic scenario against which the radar sensors can be tested.

Figure 5. A screen capture of perception algorithm testing using radar scene emulation. The right screen shows a simulated traffic scenario which is emulated by the radar scene emulator on the left. The green dots represent emulated radar reflections while the red dots show radar sensor detections.
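To give a feel for what the quoted distance and velocity ranges mean at the signal level, the sketch below converts them into round-trip delay (2R / c) and Doppler shift (2 v f / c). It only evaluates these textbook relations and says nothing about how any emulator works internally; the 77 GHz carrier frequency is an assumption for the example.

```python
C = 299_792_458.0  # speed of light in m/s


def round_trip_delay_s(range_m: float) -> float:
    """Time for the radar signal to reach a target and return: 2R / c."""
    return 2.0 * range_m / C


def doppler_shift_hz(radial_speed_kmh: float, carrier_hz: float = 77e9) -> float:
    """Doppler shift of the echo from a target closing at the given speed.

    The 77 GHz default carrier is an assumption for illustration.
    """
    radial_speed_mps = radial_speed_kmh / 3.6
    return 2.0 * radial_speed_mps * carrier_hz / C


print(f"1.5 m target: {round_trip_delay_s(1.5) * 1e9:.1f} ns round trip")
print(f"300 m target: {round_trip_delay_s(300.0) * 1e6:.2f} us round trip")
print(f"400 km/h target: {doppler_shift_hz(400.0) / 1e3:.1f} kHz Doppler shift")
```

Under these assumptions, the nearest target corresponds to a delay of about 10 ns, the farthest to about 2 µs, and the fastest to a Doppler shift of about 57 kHz.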

Radar scene emulation is extremely useful for pre-roadway drive tests, as both radar sensors and the algorithms can undergo numerous design iterations quickly to fix bugs and fine-tune designs. Apart from ADAS and autonomous driving functional testing, it can help automakers with variant handling applications, such as validating the effect of different bumper designs, paintwork, and radar module positioning on radar functions.

For autonomous driving platform providers and radar systems manufacturers, enhancing the vehicle’s perception of different realistic traffic scenes through multiple repeatable and customisable scenarios can allow the radar sensors to capture vast amounts of data for machine learning by autonomous driving algorithms.

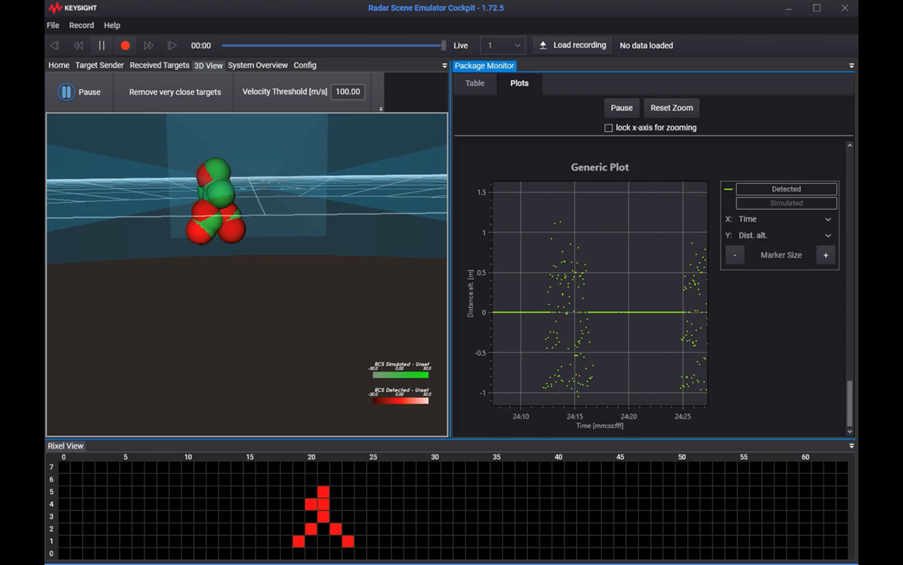

Nowadays, advanced digital signal processing (DSP) also plays a crucial role in enabling fine-tuning of individual radar detections. For instance, the radar can pick up different points of a pedestrian’s arms and legs, including the velocity, distance, cross section (size), and angle (both horizontally and vertically), as illustrated in Figure 6. This provides vital information for training radar algorithms to distinguish pedestrians from the digital 4D form factor of, say, a dog crossing the road.

Figure 6. Advanced digital processing with radar scene emulation provides greater granularity of dynamic targets, such as a moving pedestrian.

The Road to Super Sensors Begins with Reliable Testing

From chip design to its fabrication and subsequent radar module testing, each step of the automotive radar design, development, and fabrication lifecycle demands rigorous testing.

There are many test challenges when working with mmWave frequencies for automotive radar applications. Engineers need to consider the test setup, ensure the test equipment can carry out ultra-wideband mmWave measurements, mitigate signal-to-noise ratio loss, and meet emerging standards requirements by different market regions for interference testing.

At the radar module level, testing of modern 4D and imaging radar modules requires test equipment that can provide more bandwidth and better distance accuracy.

Finally, the ultimate challenge is integrating the automotive radar into the ADAS and automated driving system and subjecting the algorithms to everything from standard driving situations to the one-in-a-million corner case. A well-trained and tested radar super sensor system will ensure smoother and safer rides for passengers as more drivers take a back seat in the future.

About the Author

Hwee Yng is an advocate for clean tech innovations and works with Keysight’s e-mobility design and test solutions team to connect end-users in this complex energy ecosystem with solutions to enable their next innovation. She is a solutions marketing manager with Keysight’s automotive and energy portfolio.

Before joining Keysight and formerly Agilent Technologies, Hwee Yng was a news journalist with a passion for the environmental and sustainability beat. She graduated from the National University of Singapore with an Honors degree in Botany, with special interest in ecology. In her free time, Hwee Yng works with NGOs in the Philippines to support low-cost off-grid electrification and livelihood technical training programs.